
SD ID: EPOS/VERCE

Virtual Earthquake and Computational Earth Science e-science environment in Europe

Organisations & Contacts:

- Andreas Rietbrock, University of Liverpool

- Alessandro Spinuso, KNMI

- André Gemünd, Fraunhofer SCAI

- Giuseppe La Rocca, EGI Foundation

- Michael Schuh, DESY

OVERVIEW: The demonstrator democratises access to HPC simulation codes, data and workflows for the evaluation of Earth models. It is implemented through a science gateway and offers reproducibility and provenance services for monitoring, validation and reuse of the data produced during the different phases of the analysis.

VERCE’s strategy is to build upon a service-oriented architecture and a data-intensive platform delivering services, workflow tools, and software as a service, and to integrate the distributed European public data and computing infrastructures (GRID, HPC and CLOUD) with private resources and the European integrated data archives of the seismology community.

SCIENTIFIC OBJECTIVES OF THE DEMONSTRATOR:

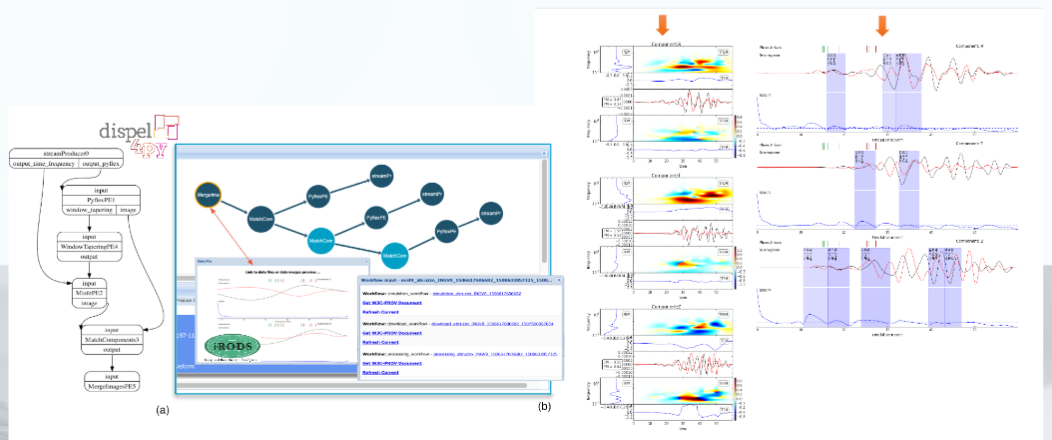

We aim to enhance the services of the VERCE portal and to integrate the EGI FedCloud infrastructure as the main data-intensive computational service provider for the Misfit Analysis Workflows. These workflows post-process the synthetic results in combination with observational data accessed from the FDSN network. They use authorisation delegation mechanisms to connect to remote data stores and web services, and they produce provenance data. The mechanism that manages these functionalities should work seamlessly across different resources and networks.

- Readiness for FedCloud integration.

- Post-processing workflows to be available on Cloud resources.

- Integration of B2STAGE between HPC and Cloud.

- Resources and self-managed iRODS instance completed.

- Improvement of the provenance model and of the associated reproducibility and validation services completed.

- First test with large data post-processing and concurrent users successfully carried out on the Cloud.
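The Misfit Analysis Workflows mentioned above compare synthetic results with observational data. The following is only a minimal sketch of that idea, assuming a normalised least-squares waveform misfit and a simple demean-and-normalise preprocessing step; the source does not state which misfit measure or preprocessing the VERCE workflows use, and the function names `preprocess` and `l2_misfit` and the toy traces are hypothetical.

```python
import math


def preprocess(trace):
    """Remove the mean and scale to unit peak amplitude (assumed preprocessing)."""
    mean = sum(trace) / len(trace)
    demeaned = [x - mean for x in trace]
    peak = max(abs(x) for x in demeaned)
    return [x / peak for x in demeaned] if peak > 0 else demeaned


def l2_misfit(observed, synthetic):
    """Normalised least-squares misfit between two equal-length traces."""
    if len(observed) != len(synthetic):
        raise ValueError("traces must have the same number of samples")
    residual = sum((o - s) ** 2 for o, s in zip(observed, synthetic))
    energy = sum(o ** 2 for o in observed)
    return residual / energy if energy > 0 else float("inf")


# Toy traces standing in for downloaded observations and simulated synthetics.
observed = preprocess([math.sin(0.1 * i) for i in range(200)])
synthetic = preprocess([math.sin(0.1 * i + 0.05) for i in range(200)])
misfit = l2_misfit(observed, synthetic)
print(f"normalised L2 misfit: {misfit:.6f}")
```

A value of zero means the two traces are identical after preprocessing; larger values indicate a poorer fit between the Earth model and the observations.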

MAIN ACHIEVEMENTS

The Forward Modeling tool hosted by the gateway has been extended with an additional simulation code (SPECFEM3D_GLOBE). The front-end code was also upgraded to Liferay 6.2 to be compliant with gUSE 3.7.5. A 3D virtual globe has been integrated, along with a list of other 2D projections. The processing workflows enabling the Misfit analysis (data download, preprocessing and misfit) have been refactored to support their execution on the FedCloud, in addition to the current HPC resources. Moreover, the lineage and provenance services and repository have been upgraded to a later version of the S-ProvFlow API and storage system, with improved support for the PROV data model. The upgraded system has been deployed in its containerised version within SCAI resources. The upgrade also covered the provenance exploration tools, in a version specific to the VERCE portal.
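To illustrate what provenance in the PROV data model can look like for a single workflow step, here is a minimal sketch that builds a record loosely following the W3C PROV-JSON layout. It is not the S-ProvFlow schema or API: the `ex:` namespace, the `prov_record` helper, and all identifiers and file names are hypothetical.

```python
import json
from datetime import datetime, timezone


def prov_record(run_id, user, inputs, outputs, started, ended):
    """Describe one workflow run as a PROV-style document (illustrative only)."""
    activity = f"ex:{run_id}"
    agent = f"ex:{user}"
    doc = {
        "prefix": {"ex": "http://example.org/verce-sketch/"},  # hypothetical namespace
        "entity": {},
        "activity": {
            activity: {
                "prov:startTime": started.isoformat(),
                "prov:endTime": ended.isoformat(),
            }
        },
        "agent": {agent: {}},
        "used": {},
        "wasGeneratedBy": {},
        "wasAssociatedWith": {
            "_:assoc1": {"prov:activity": activity, "prov:agent": agent}
        },
    }
    # Input data consumed by the run.
    for i, name in enumerate(inputs, start=1):
        doc["entity"][f"ex:{name}"] = {}
        doc["used"][f"_:used{i}"] = {
            "prov:activity": activity,
            "prov:entity": f"ex:{name}",
        }
    # Output data produced by the run.
    for i, name in enumerate(outputs, start=1):
        doc["entity"][f"ex:{name}"] = {}
        doc["wasGeneratedBy"][f"_:gen{i}"] = {
            "prov:entity": f"ex:{name}",
            "prov:activity": activity,
        }
    return doc


record = prov_record(
    run_id="misfit-run-001",
    user="researcher",
    inputs=["observed.mseed", "synthetic.mseed"],
    outputs=["misfit.json"],
    started=datetime(2018, 1, 1, 12, 0, tzinfo=timezone.utc),
    ended=datetime(2018, 1, 1, 12, 5, tzinfo=timezone.utc),
)
print(json.dumps(record, indent=2))
```

Recording which inputs a run used and which outputs it generated is what allows a later user to trace a misfit result back to its data and to re-run or validate it.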

IMPACT: Simulations and raw-data staging workflows should be organised efficiently, supported by generic mechanisms that control data reduction and transfers at runtime between HPC, data-intensive Clouds and intermediate storage sites. This rapidly frees expensive HPC storage, decreases unnecessary idle time and accelerates delivery. Reusable workflows and deployment templates combine heterogeneous computations, taking advantage of their proximity to the storage sites.

We will evaluate the distributed deployment of the VERCE data management layer through the adoption of the EOSC and e-infrastructure services (B2STAGE, B2SHARE, FedCloud). Similar challenges will affect the volcanological studies community, whose main EPOS representatives have already been approached. Moreover, the provenance aspects can be extended to communities within and beyond EPOS. We will demonstrate how our approach favours the effective application of the FAIR principles.

RECOMMENDATIONS FOR THE IMPLEMENTATION

The EOSC computational services should offer scale-up and multi-architecture support. Expert research-developers should be able to develop serverless workflows, where available resources are allocated and scaled dynamically, removing the burden of configuring specific providers. Such mechanisms should be provided or brokered by the EOSC, which should also advertise to stakeholders the tools that are compliant with them.

The reproducibility problem should be addressed structurally, scaling from ad-hoc solutions to generic services. Computational tools offered by the EOSC should be aware of these services and use them, offering options for adaptation to the use cases (granularity, privacy rules and visibility) and for contextualisation to the user’s domain. The application of the FAIR principles should account for the attribution and privacy level of preliminary results, so that the principles can be adopted from the early days of a research campaign.